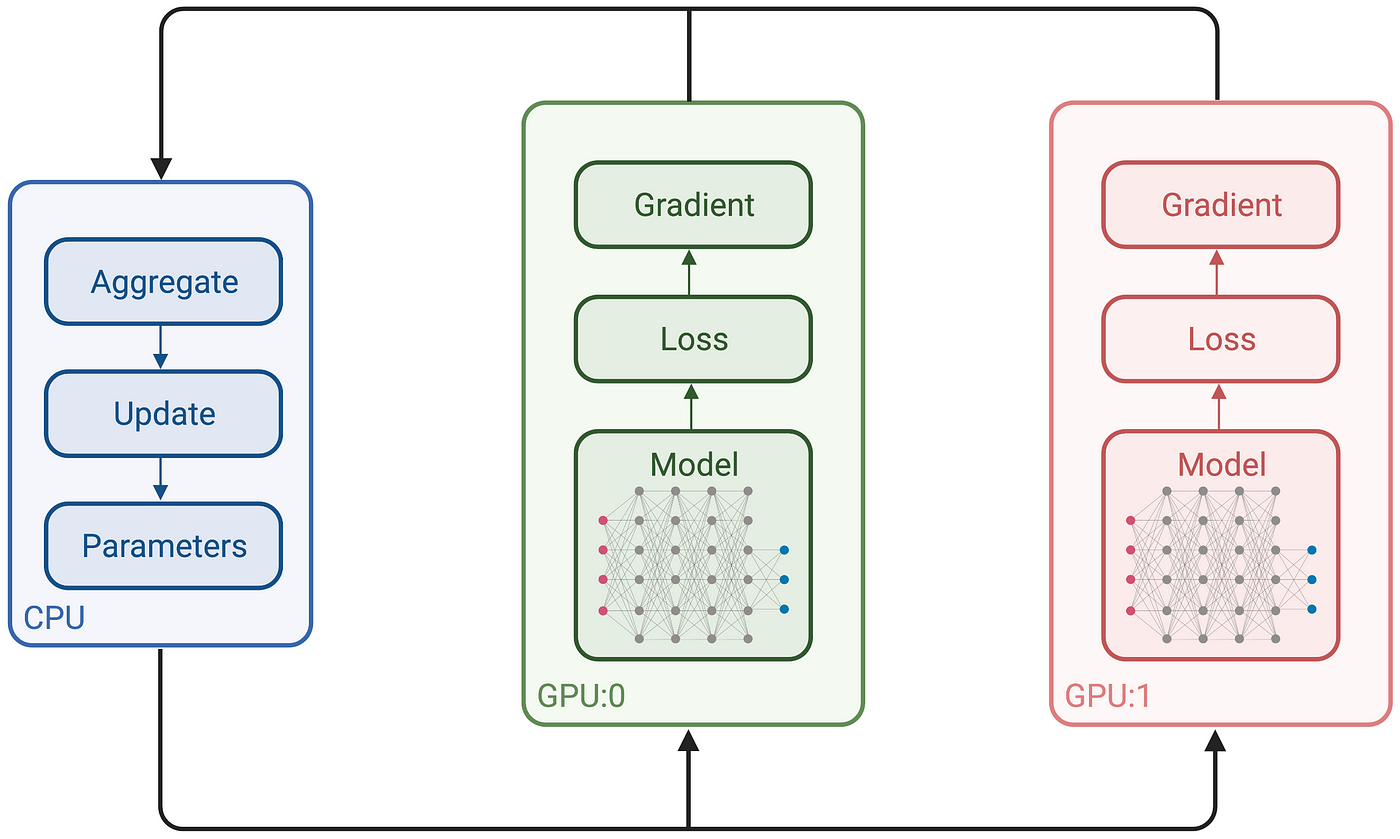

GitHub - sayakpaul/tf.keras-Distributed-Training: Shows how to use MirroredStrategy to distribute training workloads when using the regular fit and compile paradigm in tf.keras.

GitHub - yeamusic21/DistilBert-TF2-Keras-Multi-GPU-Sagemaker-Training: DistilBert TensorFlow 2.1.0 Keras Multi GPU Sagemaker Training Job

Multi-GPU and distributed training using Horovod in Amazon SageMaker Pipe mode | AWS Machine Learning Blog

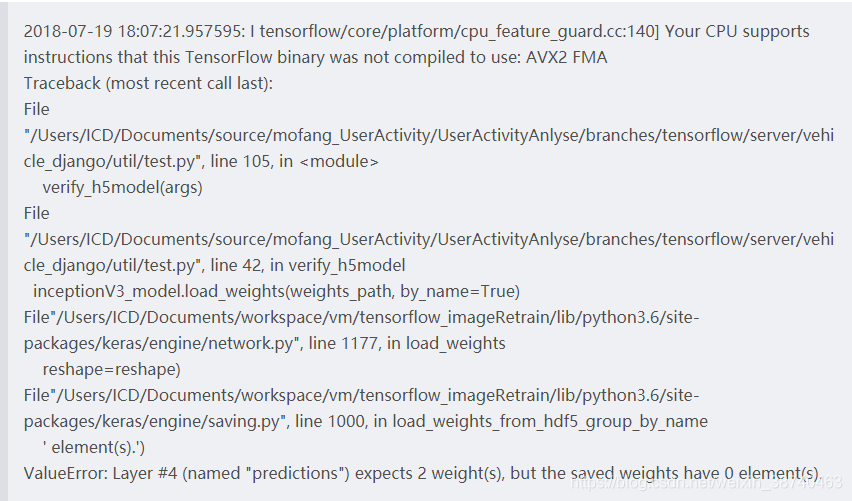

Keras multi-GPU training: model weights file cannot be used on a single CPU or GPU machine - Code World